Note to the reader: This is a revised edition of a paper published in Cerebral Cortex (1994;7:453–464). The definitive original print version is copyright ©1994 by Oxford University Press.

New figures, text, and links have been incorporated into the revision. Revised HTML (http://nervenet.org/netpapers/Rosen/AudDisc94/AudDisc.html) copyright ©2000 by Glenn D. Rosen

INDUCED MICROGYRIA AND AUDITORY TEMPORAL PROCESSING IN RATS: A MODEL FOR LANGUAGE IMPAIRMENT?

Roslyn Holly Fitch,* Paula Tallal,* Christine P. Brown,* Albert M. Galaburda,† & Glenn D. Rosen†

*Center for Molecular and Behavioral Neuroscience, Rutgers University, 197 University Ave., Newark, NJ 07102. †Dyslexia Research Laboratory and Charles A. Dana Research Institute, Beth Israel Deaconess Medical Center; Division of Behavioral Neurology, Beth Israel Deaconess Medical Center, 330 Brookline Avenue, Boston MA 02215; Harvard Medical School, Boston, MA 02115.

Please address correspondence to:

Roslyn Holly Fitch

Biobehavioral Graduate Program

3310 Horsebarn Hill Road

Box U-154

University of Connecticut

Storrs, CT 06268-0154

Email: hfitch@psych.psy.uconn.edu

Studies have shown the existence of minor developmental cortical malformations, including microgyria, in the brains of dyslexics. Concomitant studies have shown that language impaired individuals exhibit severe deficits in the discrimination of rapidly presented auditory stimuli, including phonological and non-verbal stimuli (i.e., sequential tones). In an effort to relate these results, male rats with neonatally induced microgyria were tested in an operant paradigm for auditory discrimination of stimuli consisting of 2 sequential tones. Subjects were shaped to perform a go-no go target identification, using water reinforcement. Stimuli were reduced in duration from 750 to 375 ms across 24 days of testing. Results showed that all subjects were able to discriminate at longer stimulus durations. However, bilaterally lesioned subjects showed specific impairment at stimulus durations of 500 ms or less, and were significantly depressed in comparison to shams. Right and left lesioned subjects were significantly depressed in comparison to shams at the shortest duration (375 ms). These results suggest a possible link between the neuropathologic anomalies and the auditory temporal processing deficits reported for language impaired individuals.

Developmental language disorders, including developmental dyslexia, are characterized by discrepant or unusually low language ability despite normal intelligence, motivation, and instruction. Investigations of potential biologic substrates underlying language impairment (LI) have focused on both anatomical and physiological differences between language-impaired and control individuals. For example, developmental neuropathologic anomalies have been seen in the brains of dyslexics (1-3). These anomalies, although present bilaterally, are found predominantly in frontal and perisylvian regions of the left hemisphere, and include ectopic collections of neurons in the molecular layer, dysplasias, myelinated scars, and polymicrogyria. Although the relationship between these anomalies and the behavioral deficits seen in dyslexia cannot be tested directly in humans, experiments with autoimmune strains of mice, 15-40% of whom spontaneously develop molecular layer ectopias (4,5), have demonstrated some clear correlations between behavior and anatomy. For example, left-pawed ectopic mice perform better than their right-pawed counterparts on a water escape task, but worse on a discrimination learning paradigm (6). Ectopic mice in general perform worse in Morris Maze and black-white discrimination learning tasks, although these deficiencies can be ameliorated by post-weaning enrichment (7).

Other research has probed the substrates of language impairment by focusing on the functional underpinnings of LI, hypothesizing that dysfunction at a sensory processing level may have cascading developmental effects, and hence lead to higher-order language problems. These studies have demonstrated that a large sub-group of language impaired children exhibit severe deficits in the ability to perform auditory discriminations of information presented sequentially within a brief time window (under 500 ms). Although this deficit is profoundly evident when children with LI are asked to discriminate speech stimuli characterized by brief temporal components (e.g., consonant-vowel syllables, Refs 8-10), the presented material need not be linguistic in order for the deficit to be observed. While normal children are able to discriminate two 75 ms tones separated by as little as 8 ms, language impaired children require over a 350 ms interval to perform this same basic discrimination (9). These and other findings (8,11-18) strongly suggest a fundamental dysfunction of the basic ability to perform rapid auditory discriminations in individuals with LI. Subsequent studies have demonstrated similar temporal integration deficits in the visual and tactile modalities, as well as in the performance of sequential motor functions (19-22). These results suggest that a fundamental sensory/motor deficit in temporal integration may characterize LI, although Tallal (23) has hypothesized that it is specifically deficits in the auditory modality which disrupt phonological processing and speech perception and, consequently, result in abnormal language acquisition.

In addition to these processing deficits observed in language impaired children, defects in the processing of rapid information in the visual system have been observed in developmental dyslexics who also do poorly on tests requiring rapid visual processing (24,25). Several recent studies have reported that dyslexics exhibit diminished visual evoked potentials to rapidly changing, low-contrast stimuli, but normal responses to slowly changing or static, high-contrast stimuli (26). Similar response differences between controls and dyslexics were observed using stimuli of differing spatial frequency (27). This response pattern is consistent with impaired functioning of the magnocellular, but not the parvocellular, division of the visual pathway. Such an interpretation is supported by the fact that neurons of the magnocellular layers of the lateral geniculate nucleus (LGN) were found to be, on average, 27% smaller in dyslexic brains (26). Interestingly, these same dyslexic brains exhibited neuropathologic anomalies as described above.

The relationship between developmental neuropathologic anomalies and functional deficits in “rapid processing,” both of which characterize language impaired individuals, has never been directly tested. The purpose of the current experiment was to merge these two approaches to the study of developmental language impairment via an experimental animal model. It has previously been shown that both molecular layer ectopias and microgyria can be induced in otherwise normal rats by neonatal damage (stab wounds and freezing lesions, respectively), and that these induced anomalies are neuroanatomically similar to those appearing spontaneously in both dyslexic humans and immune-disordered mice (28-31). In the current study, male rats who had received neonatal microgyric lesions to either left, right, or both neocortices, as well as sham operated controls, were assessed for performance on an auditory discrimination task in which the temporal parameters of the stimulus were manipulated. Our hypothesis was that microgyric lesions of the neocortex might specifically impair the ability of subjects to perform auditory discriminations at shorter stimulus durations. Further, we wished to investigate whether the left hemisphere specialization for auditory temporal processing observed in a variety of species, including humans (32-41), and specifically for intact male rats tested in a paradigm similar to that described here (42), would be evident using this preparation.

Induction of Focal Necrotic Lesions

Six time-mated female Wistar rats (Charles River Laboratories, Wilmington, MA) were delivered to the laboratory of GDR and AMG on days 16-18 of gestation. On the day after birth (P1), litters were culled to 10, maximizing for males (range = 4-8), and the male pups randomly assigned to one of four groups: left, right, or bilateral freezing lesions or sham surgery. Focal necrotic lesions were then induced based on a modification of the technique employed by Dvorák and colleagues (43,44), and reported in detail elsewhere (28,30). Briefly, pups were anesthetized via induction of hypothermia, and a small incision was made in the antero-posterior plane of the skin over the left or right cerebral hemisphere, exposing the skull. For bilateral lesions, a midline incision was made. A cooled (≈ -70°C) 2 mm diameter stainless steel probe was placed on the skull of lesion subjects, approximately midway between bregma and lambda, for 5 seconds. For bilateral lesions, the first hemisphere to receive the freezing lesion was randomly assigned. Sham subjects were prepared as above, except that the probe was maintained at room temperature. After placement of the probe the skin was quickly sutured, subjects were uniquely marked with ink injections to the footpads, warmed under a lamp and returned to the mother. Assignment of subjects to one of the four treatment groups was balanced within a litter, so that each litter had at least one member of each group.

Litters were weaned on P21 and the subjects group-housed (2-3/cage) with littermates until P45, when 24 males (6 subjects from each treatment group/4 subjects from each litter) were individually marked with picric acid and shipped to RHF.

Behavioral Testing

Upon receipt, subjects were individually housed in tubs. The behavioral testing was performed blind with respect to group. At approximately P70, subjects were put on a water restricted schedule, and received ad libitum access to water for only 15 min. per day.

Subjects were then introduced to a modified operant conditioning apparatus for training sessions of 30 to 40 minutes per day. The test apparatus consisted of a plexiglass box, modified by the attachment of a plexiglass tube of sufficient diameter to accommodate the head of an adult rat. The face of the tube was affixed to a plate containing a mechanical switch which the rat could operate with his nose, and a drinking tube below the switch. Miniature Sony speakers were affixed bilaterally (via o-rings) over holes drilled in the plexiglass tube. This apparatus was custom designed to allow shaping of subjects through a series of phases controlled by a Macintosh IIci computer. Subjects were trained to insert their head into the tube in a relatively straight position (breaking an emitter-detector beam), and to hold this position for a period of 1000 ms before pressing the illuminated nose-button to obtain a water reward. Subjects received white-noise feedback (via the speakers affixed to the tube) to indicate correct positioning. Once able to consistently perform this task (48 trials/session), subjects were introduced to the auditory discrimination paradigm.

Testing consisted of a go-no go target identification task. Once in position, the subject was exposed to an auditory stimulus that consisted of a two-tone sequence. The subject was required to assess whether this stimulus was his “target” (reinforced), or a non-target sequence (not reinforced). The full presentation of the stimulus was contingent upon proper head-placement of the subject; removal of the head during stimulus presentation resulted in an aborted trial, and a 5 s time-out (all lights extinguished). The same tone sequence was then presented on the next trial. If proper head-position was maintained for the duration of stimulus presentation, then the nose-button was illuminated for a 3 s response interval. A press following the subject’s target resulted in the presentation of water, while a press following a negative sequence resulted in a time-out of 45 s.
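The trial contingencies described above can be summarized in a short sketch. The Python below is illustrative only and is not the control software used in the experiment; the function names, the outcome labels, and the treatment of trials without a press (assumed here to end with no consequence) are assumptions introduced for the example.

```python
from typing import Optional, Tuple

ABORT_TIMEOUT_S = 5          # head withdrawn during stimulus presentation
FALSE_ALARM_TIMEOUT_S = 45   # press following a non-target sequence
RESPONSE_WINDOW_S = 3        # nose-button illuminated for this interval


def classify_trial(is_target: bool, head_held: bool,
                   press_latency_s: Optional[float]) -> str:
    """Label one trial; press_latency_s is None when no press occurred."""
    if not head_held:
        return "aborted"
    pressed = (press_latency_s is not None
               and press_latency_s <= RESPONSE_WINDOW_S)
    if is_target:
        return "hit" if pressed else "miss"
    return "false_alarm" if pressed else "correct_rejection"


def consequence(outcome: str) -> Tuple[bool, int]:
    """Return (water_delivered, timeout_seconds) for an outcome label."""
    if outcome == "hit":
        return (True, 0)
    if outcome == "false_alarm":
        return (False, FALSE_ALARM_TIMEOUT_S)
    if outcome == "aborted":
        # The same tone sequence is presented again on the next trial.
        return (False, ABORT_TIMEOUT_S)
    # Assumption: misses and correct rejections simply end the trial.
    return (False, 0)
```

For example, `consequence(classify_trial(False, True, 1.2))` corresponds to a press after a non-target sequence and yields no water and a 45 s time-out.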

The stimuli were generated by a Macintosh IIci computer (Apple Computer, Inc., Cupertino, CA), and were composed of two sine-wave tones 250 ms in duration, separated by an interstimulus interval (ISI) of 250 ms. The low tone was 1100 hertz and the high tone was 2300 hertz, presented at a supra-threshold intensity of 75 decibels; these stimuli were identical to those which had previously been used to show a right ear advantage for auditory discrimination in adult male rats (42). Only Hi-Lo or Lo-Hi sequences were assigned as targets, and these were counterbalanced across animals and remained constant for each subject across testing sessions. Negative sequences included Lo-Lo, Hi-Hi, and the opposite mixed pair. Presentation of target and non-target stimuli in a test session was random with the constraint that half of the presentations be target (to maintain motivation), and that no more than 3 target or non-target sequences occur in succession. Right and left audio speakers were alternated daily to prevent bias via differences in speaker properties. Each daily session consisted of forty-eight trials.
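The randomization constraints for a session (half of the presentations being target, and no more than 3 target or non-target sequences in succession) can be sketched as follows. This is a minimal illustration, not the original stimulus program: the rejection-sampling approach, the function names, and the uniform choice among the three non-target sequences are all assumptions.

```python
import random

# Non-target sequences for each possible target assignment.
NON_TARGETS = {
    "Hi-Lo": ["Lo-Hi", "Lo-Lo", "Hi-Hi"],
    "Lo-Hi": ["Hi-Lo", "Lo-Lo", "Hi-Hi"],
}


def longest_run(flags):
    """Length of the longest run of identical consecutive values."""
    best = run = 0
    prev = None
    for f in flags:
        run = run + 1 if f == prev else 1
        prev = f
        best = max(best, run)
    return best


def make_session(target, n_trials=48, max_run=3, seed=None):
    """Return a list of tone sequences for one session.

    Half the trials are the target; target/non-target runs never
    exceed max_run. Orders violating the constraint are reshuffled.
    """
    rng = random.Random(seed)
    flags = [True] * (n_trials // 2) + [False] * (n_trials - n_trials // 2)
    while True:
        rng.shuffle(flags)
        if longest_run(flags) <= max_run:
            break
    # Assumption: each non-target is drawn uniformly from the three types.
    return [target if f else rng.choice(NON_TARGETS[target]) for f in flags]
```

Calling `make_session("Hi-Lo", seed=1)` returns 48 sequences, 24 of which are the target.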

After 6 days of testing at the above stimulus parameters, the ISI for all sequences (including targets and non-targets) was reduced to 100 ms. All other parameters, including the assignment of each subject's target, remained constant. At the end of 6 days, the duration of the tones within the stimulus sequence was reduced from 250 to 200 ms each. After another 6 days of testing the tone durations were reduced to 150 ms and the ISI was reduced to 75 ms, for the final 6 days of testing. There were a total of 24 days of testing, with 6 days at each of 4 conditions defined by incrementally decreased stimulus duration.
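The total stimulus duration at each condition follows directly from the tone and ISI values given above. The short sketch below works through that arithmetic and the mapping from test day to condition; the names `CONDITIONS`, `total_duration_ms`, and `condition_for_day` are hypothetical and introduced only for illustration.

```python
# (tone 1, ISI, tone 2) in milliseconds for each of the 4 conditions.
CONDITIONS = {
    1: (250, 250, 250),
    2: (250, 100, 250),
    3: (200, 100, 200),
    4: (150, 75, 150),
}

DAYS_PER_CONDITION = 6


def total_duration_ms(condition):
    """Total stimulus time: both tones plus the interstimulus interval."""
    return sum(CONDITIONS[condition])


def condition_for_day(day):
    """Map test day (1-24) to its stimulus condition (1-4)."""
    if not 1 <= day <= DAYS_PER_CONDITION * len(CONDITIONS):
        raise ValueError("day must be between 1 and 24")
    return (day - 1) // DAYS_PER_CONDITION + 1


for cond in CONDITIONS:
    print(cond, total_duration_ms(cond))
# prints:
# 1 750
# 2 600
# 3 500
# 4 375
```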

For each test session the sequence of presentation on each trial, and the corresponding response type (hit, false alarm, correct rejection or miss) and latency to respond, were recorded by a Macintosh IIci computer. All phases of training were controlled by programs written in the software program LabView (National Instruments, Austin, TX) specifically for this purpose.

Histology

After the completion of testing subjects were deeply anesthetized with ketamine and xylazine, and were transcardially perfused with 0.9% saline and 10% formalin. The skulls were extracted, placed in 10% formalin, and shipped to GDR. There, the brains were removed from the skulls and were placed into fresh 10% formalin for 7 days, before being dehydrated in a series of graded alcohols and embedded in 12% celloidin (cf. Ref. 5). Serial sections were cut coronally at 40 µm and a series of every 10th section was stained for Nissl substance with cresylecht violet. Using a drawing tube attached to a Zeiss Universal photomicroscope (Carl Zeiss, Inc., New York), both neocortical hemispheres were drawn from the frontal to occipital pole. In addition, the damaged area was traced starting from the first section which showed any architectonic distortion and proceeding until the distortion had unambiguously disappeared. Previous research (28,30) had demonstrated that the appearance of the area of damage with Nissl stains correlated quite well with assessment of damage as seen with various immunocytochemical stains (i.e., glial fibrillary acidic protein, glutamate, and 68 KDa neurofilament). The area of the neocortical hemispheres, as well as that of the damaged area, was measured from these drawings using a Zeiss MOP-3 Electronic Planimeter interfaced to a Macintosh Plus computer. Total neocortical volume and microgyric volume were determined using Cavalieri’s estimation (45). In rare situations where, because of missing or damaged sections, the equi-spaced criterion required for Cavalieri’s estimator was not met, a measurement method involving piece-wise parabolic integration was employed (45). The architectonic location of the lesion was also quantified by overlaying the topographic location on a normalized flattened map of the neocortex derived from Zilles (46).
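As a rough illustration of the volume estimate, Cavalieri's estimator multiplies the summed cross-sectional areas of equally spaced sections by the distance between them. The sketch below assumes a spacing of 0.4 mm (40 µm sections, every 10th section measured) and ignores tissue shrinkage and the piece-wise parabolic fallback; the function names and the example areas are hypothetical and are not data from this study.

```python
def cavalieri_volume(areas_mm2, spacing_mm=0.4):
    """Estimate volume (mm^3) from areas of equally spaced sections.

    spacing_mm is the distance between measured sections; 0.4 mm is
    assumed here from 40 um sections with every 10th section stained.
    """
    return spacing_mm * sum(areas_mm2)


def percent_damage(damaged_areas_mm2, total_areas_mm2, spacing_mm=0.4):
    """Damaged volume as a percentage of total neocortical volume."""
    damaged = cavalieri_volume(damaged_areas_mm2, spacing_mm)
    total = cavalieri_volume(total_areas_mm2, spacing_mm)
    if total == 0:
        raise ValueError("total volume must be non-zero")
    return 100.0 * damaged / total


# Hypothetical areas (mm^2) for three consecutive measured sections.
example_total = [10.0, 10.0, 10.0]
example_damaged = [0.0, 0.5, 0.5]
print(round(percent_damage(example_damaged, example_total), 2))
```

Because the same spacing applies to both the damaged and the total areas, the percentage depends only on the ratio of the summed areas.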

Histology

Histology confirmed the expected location of cortical injury in 22 of the 24 subjects (Figure 1). However, it was determined that two subjects (a sham and a left-lesion) had been interchanged at some point during the study. Since the exchange could have occurred either before or after behavioral testing, these two subjects were dropped from further analyses. Thus the final n per group was: sham=5, right-lesion=6, left-lesion=5, bilateral-lesion=6.


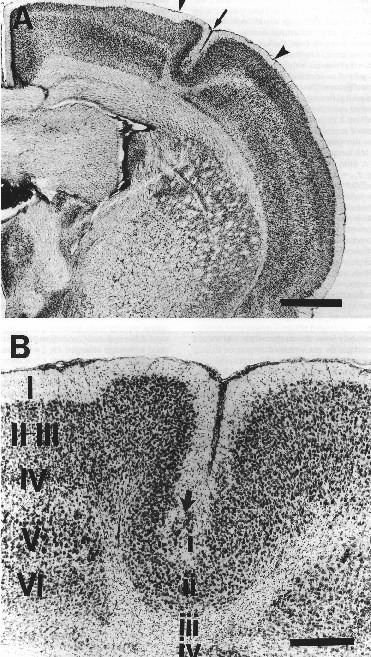

Figure 1. A. Low-power photomicrograph of Nissl-stained section showing hemisphere with microgyria with microsulcus (arrow) induced by a P1 freezing lesion. Note the focal nature of the cortical damage (arrowheads). Bar = 1 mm. B. High-power photomicrograph of same microgyria in A (arrow) contrasting the undisturbed six layer cortex medial to the damaged area (large roman numerals) with the four-layered microgyria (small roman numerals). Large arrow denotes ectopic neurons in layer i of the microgyria. Bar = 250 µm.

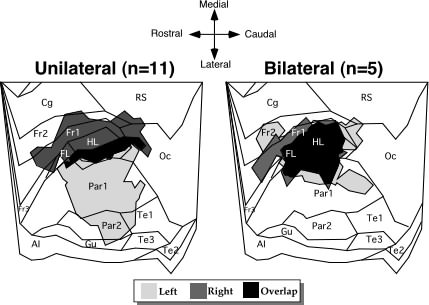

The degree of neocortical damage was equivalent in all surgical groups. Thus, the percent of damage in the neocortical hemispheres did not differ between right-lesioned and left-lesioned subjects (Mean ± SEM = 5.04 ± 0.64 vs. 7.16 ± 1.52, respectively; F1,9 = 1.88, ns). Repeated measures ANOVA demonstrated no difference in the percent of damage between the right and left hemispheres of bilaterally lesioned animals (Mean ± SEM = 4.79 ± 0.65 vs. 5.43 ± 0.81, respectively; F1,5 = 3.61, ns). The range of topographic location of the lesions, shown in Figure 2, demonstrates the relative symmetry of damage in bilaterally injured animals. The distribution of the lesion location of those sustaining unilateral left hemisphere lesions, however, was more medial than their unilateral right hemisphere-lesioned counterparts, and their regions of overlap were quite small. Whereas the microgyria in the right hemisphere involved some frontal, hindlimb, and forelimb regions of the somatosensory cortex, the left hemisphere locations were mostly confined to lateral portions of the somatosensory cortex.


Figure 2. Overall topographic location of microgyria (shaded areas) from the left and right hemispheres of unilaterally and bilaterally lesioned animals placed over a flattened map of the neocortex derived from Zilles (46). The unilateral portion of the figure represents the 5 left-lesioned and 6 right-lesioned rats. Abbreviations: AI - agranular insular (includes dorsal, posterior, and ventral part); Cg - cingulate cortex (includes Cg1-3); FL - forelimb area; Fr1, Fr2, Fr3 - frontal cortex areas 1, 2, and 3 respectively; Gu - gustatory cortex; HL - hindlimb area; Oc - occipital cortex (includes all subdivisions of Oc1 and Oc2); Par1 and Par2 - primary and secondary somatosensory cortices, respectively; PRh - perirhinal area; RS - retrosplenial cortex (includes granular and agranular subdivisions); Te1, Te2, and Te3 - primary auditory cortex, and temporal areas 2 and 3, respectively.

Behavior

It has previously been shown that while rats trained in this paradigm do not withhold responses to negative sequences (the incidence of correct rejections and misses combined being less than 1% of responses), response latencies following the presentation of target versus non-target do show significant differences (42,47). Specifically, latencies to respond to the target (hits) have been shown to be significantly shorter than latencies for incorrect responses to non-target sequences (false alarms) when discrimination occurs. This effect has also been demonstrated for human babies tested in an operant discrimination paradigm using similar auditory stimuli (48). Since a stimulus-specific difference in response latencies can only exist where subjects actually differentiate the stimuli, a significant false alarm/hit difference validates subject discrimination (42).

Based on this method, response latencies for hits and false alarms (FA) were analyzed across days (six days of testing), treatment groups (right, left, bilateral or sham), and stimulus conditions (four stimulus conditions), in a multifactorial ANOVA. In addition, the FA/hit latencies were analyzed across conditions within each treatment group separately, to delineate the pattern of discrimination for each individual group. Finally, mean FA/hit differences for individual subjects were examined, to verify that the observed effects were not sample-dependent or the result of outlier values.
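The discrimination measure used in these analyses, the difference between mean false alarm latency and mean hit latency, can be sketched as below. This is only an illustration of the index; the trial representation as `(outcome, latency_ms)` pairs and the function name are assumptions, and the example latencies are made up rather than taken from the study.

```python
from statistics import mean


def discrimination_index(trials):
    """Mean false-alarm latency minus mean hit latency, in ms.

    trials: iterable of (outcome, latency_ms) pairs, where outcome is
    "hit" or "false_alarm"; other outcomes are ignored. A positive
    value means presses after the target were faster than presses
    after non-target sequences.
    """
    trials = list(trials)
    hits = [lat for outcome, lat in trials if outcome == "hit"]
    fas = [lat for outcome, lat in trials if outcome == "false_alarm"]
    if not hits or not fas:
        raise ValueError("need at least one hit and one false alarm")
    return mean(fas) - mean(hits)


# Hypothetical session: two hits and two false alarms.
example = [("hit", 400), ("hit", 500),
           ("false_alarm", 700), ("false_alarm", 600)]
print(discrimination_index(example))  # 650 - 450 = 200
```

An index near zero corresponds to no latency difference between response types, which the text treats as chance-level discrimination.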

Discrimination Between Groups

Sham versus Lesioned Subjects. An ANOVA was performed, using the mean response latency for false alarms and hits for each subject on each day as the dependent measure. Group was treated as a between variable with 2 levels, sham (n=5) and lesioned (combining right, left, and bilateral lesions, n=17). Condition was treated as a within variable with 4 levels (condition 1: tone 1 = 250 ms/ISI = 250 ms/tone 2 = 250 ms; condition 2: 250/100/250; condition 3: 200/100/200; and condition 4: 150/75/150). Day and Response Type (FA versus hit) were treated as within variables with 6 and 2 levels, respectively.

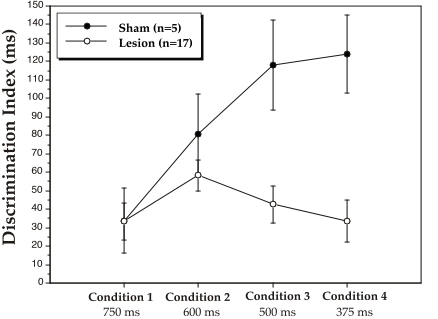

There was a highly significant effect of Response Type (F1,20 = 55.8, P < .001), confirming that false alarm latencies were significantly longer than hit latencies. As discussed above, this FA/hit difference represents significant discrimination of the stimuli. An interaction between Group X Response Type (F1,20 = 7.2, P < .02), however, demonstrated that the FA/hit difference was significantly larger for shams as compared to lesioned subjects. Furthermore, an interaction between Condition X Group X Response Type (F3,60 = 2.8, P < .05) indicated that this treatment effect on discrimination was specific to the shorter stimulus durations. Figure 3 depicts this effect by plotting discrimination indices (FA/hit differences in ms) as a function of condition and group. At conditions 1 and 2, simple effects of Group on discrimination index were non-significant, showing that both groups performed the discrimination equally well. However, as stimulus duration was further reduced (conditions 3 and 4), a significant sham advantage emerged (F1,20 = 5.0 and F1,20 = 7.4 respectively, P < .05).


Figure 3. Mean discrimination indices, as calculated by false alarm minus hit latency (in ms), for sham and lesion groups at the 4 stimulus duration conditions. The numbers under each condition are total stimulus time (pre-tone/ISI/post-tone). Discrimination indices are mean scores over 6 days of testing at each condition.

Shams versus Right, Left, and Bilateral Lesioned Subjects. To determine the influence of lesion site on these effects, an ANOVA was performed as above but with a breakdown of all 4 treatment groups: sham (n=5), right-lesion (n=6), left-lesion (n=5), and bilateral-lesion (n=6). Thus, Group was a between variable with 4 levels, and Condition, Day and Response Type were within variables with 4, 6, and 2 levels respectively.

As above, Response Type was found to be highly significant (F1,18 = 53.4, P < .001). In addition, the Group X Response Type interaction was near-significant (F3,18 = 3.1, P = .055), reflecting the fact that the FA/hit difference was largest for shams, and smallest for bilaterally lesioned subjects. Also, a near-significant Condition X Group X Response Type interaction was found (F9,54 = 2.0, P =.064), consistent with group differences only at the shorter stimulus durations (see Figure 4).


Figure 4. Mean discrimination index, as calculated by the false alarm minus hit difference (in ms), for sham, right lesion, left lesion, and bilateral lesion groups at the 4 stimulus duration conditions. The numbers under each condition are total stimulus time (pre-tone/ISI/post-tone). Discrimination indices are mean scores over 6 days of testing at each condition.

Further assessment of this interaction via simple effects analyses of FA/hit differences (i.e., discrimination indices) between each group revealed the following. First, discrimination indices were significantly larger (indicating better discrimination) for shams as compared to bilaterally lesioned subjects at the shorter stimulus durations, conditions 3 (F1,9 = 8.68, P < .02) and 4 (F1,9 = 6.7, P < .05). Interestingly, the discrimination indices were also significantly larger for right-lesioned subjects as compared to bilaterally lesioned subjects at condition 3 (F1,10 = 5.05, P < .05). In addition, discrimination indices were significantly larger for shams as compared to right-lesioned subjects (F1,9 = 6.2, P < .05), and were near-significant for the sham/left-lesion comparison (F1,8 = 5.2, P = .052), at the shortest stimulus duration, condition 4.

Discrimination within each Group

To determine whether subjects within each group were discriminating significantly at each stimulus duration, FA versus hit latencies were analyzed via ANOVA within each group separately. Since it has been shown in similar paradigms that false alarm latencies are significantly longer than for hits (42,47), one-tailed analyses were used to assess main effects of Response Type. Condition was again treated as a repeated measure with 4 levels. In addition, the six days of testing at each condition were broken into two sub-blocks of 3 days, to extract the effect of “initial” versus “experienced” performance at each condition. Thus Block was a repeated measure with 2 levels, and Day was a repeated measure (within Block) with 3 levels.

Shams. The main effect of Response Type was highly significant (F1,4 = 20.4, P < .01), reflecting discrimination of the target within the sham group. The Condition X Response Type interaction was also significant (F3,12 = 3.9, P < .05), with discrimination indices (FA/hit differences) increasing for this group across conditions (see Figure 4). This appears to reflect a general learning curve for shams across the 24 days of testing, despite the decreasing duration of stimuli used across conditions.

Simple effects of discrimination (FA versus hit latency) were examined at each of the 4 conditions. The FA/hit difference was not significant at condition 1, but was significant thereafter at condition 2 (F1,4 = 6.9, P < .05), condition 3 (F1,4 = 13.7, P < .02), and condition 4 (F1,4 = 36.9, P < .005; see Figure 4), indicating that shams were able to discriminate at each of these conditions. There were no Day or Block effects for this group.

Right Lesions. The main effect of Response Type was significant (F1,5 = 18.7, P < .005), reflecting discrimination of the target within the right lesion group. The Condition X Response Type interaction was not significant, reflecting the fact that the discrimination index stayed relatively constant across conditions (see Figure 4). Simple effects of discrimination at each of the 4 conditions showed that, like shams, the FA/hit difference was not significant at condition 1, but was significant thereafter at condition 2 (F1,5 = 14.2, P < .01), condition 3 (F1,5 = 13.61, P < .01), and condition 4 (F1,5 = 9.2, P < .02; see Figure 4). There were no Day or Block effects for this group.

Left Lesions. The main effect of Response Type was again significant (F1,4 = 28.07, P < .005), reflecting significant discrimination of the target within the left lesion group. Simple effects analysis of discrimination at each of the 4 conditions revealed that the FA/hit difference was near-significant at condition 1 (F1,4= 4.1, P =.057), and was significant at conditions 2 (F1,4 = 19.7, P < .01) and 3 (F1,4 = 5.5, P <.05), but was not significant at condition 4 (F1,5 = 0.9, P = .38; see Figure 4). These results indicate that subjects with left lesions were able to discriminate at the longer durations, but were specifically impaired at the shortest stimulus duration. There were no Day or Block effects for this group.

Bilateral Lesions. The pattern of discrimination for subjects having received bilateral lesions was clearly distinct from the other 3 groups. First, the effect of overall Response Type was only marginal (F1,5 = 2.7, P =.08). Examination of simple effects of Response Type within each condition showed that FA latencies were significantly longer than for hits at conditions 1 (F1,5 = 7.8, P < .02), and 2 (F1,5 = 22.78, P < .01), but were non-significant at conditions 3 and 4 (F1,5 = 0.02 and 0.11 respectively; see Figure 4). These results also indicate that bilaterally lesioned subjects were able to discriminate at the longer stimulus durations, but exhibited specific impairment at the shorter stimulus durations.

Interestingly, a highly significant Block X Response Type interaction (F1,5 = 23.44, P < .01) was found for this group, reflecting the fact that bilaterally-lesioned animals averaged a discrimination score close to zero (chance) for the first 3 days of testing at all 4 conditions, and then showed marked improvement by the final 3 days at each condition (although not sufficient improvement to attain significant discrimination at the second block in conditions 3 and 4). Such a pattern of “step” learning contrasted with the relatively stable patterns of discrimination observed across days for sham and right lesioned subjects at each condition.

Distribution of Discrimination Scores within Groups

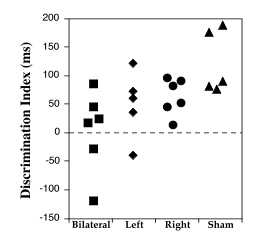

Figure 5 demonstrates that there was almost no overlap between mean discrimination scores of bilaterally lesioned and sham subjects, and minimal overlap between left-lesioned subjects and shams, when individual discrimination indices across conditions 3 and 4 (where group differences emerged) were plotted. There were no apparent outlier values in any group.


Figure 5. Distribution of discrimination indices for individual subjects by treatment group, across Days 13-24 (Conditions 3 and 4).

Auditory Discrimination and Microgyria

The results can be summarized as follows: (1) All groups showed significant discrimination at the longer stimulus durations as measured by latency differences between FA and hits (cf. Ref. 42); (2) Lesion subjects (combined) demonstrated depressed discrimination as compared to shams, specifically at the 2 shorter stimulus durations (conditions 3 and 4); (3) This effect was most marked for subjects with bilateral lesions, who failed to discriminate significantly at the 2 shorter stimulus durations. Subjects with unilateral lesions of the left or right hemisphere were also depressed as compared to shams at the shortest stimulus duration, and left-lesioned subjects showed no significant discrimination at this duration; (4) Bilaterally-lesioned subjects discriminated at chance levels during the first few days at all conditions, and improved to show overall significant discrimination only for the longer stimulus durations, conditions 1 and 2.

These results support the hypothesis that neonatal microgyric lesions of neocortex specifically impair the ability of subjects to perform rapid auditory discriminations in adulthood. Furthermore, subjects showed the most marked impairment if these lesions were bilateral. Left or right hemispheric lesions depressed discrimination at the shortest stimulus duration, although subjects with right hemisphere lesions still showed some significant discrimination while left lesioned subjects did not. This suggestion of left hemisphere specific effects is consistent with evidence that intact adult male rats exhibit a right-ear advantage for discriminating identical auditory stimuli (42). However, it should be noted that: (1) lesion location for subjects with left and right lesions was not identical (see Figure 2); and (2) discrimination indices for left and right lesioned groups did not differ significantly at any condition in the current study. Consequently any interpretations regarding hemispheric specialization demonstrated by this paradigm will require further assessment.

The fact that discrimination deficits due to microgyric lesions emerged when the total stimulus duration dropped to 500 ms or less (condition 3 = 500 ms, two 200 ms tones and a 100 ms ISI; condition 4 = 375 ms, two 150 ms tones and a 75 ms ISI; see Figures 3 and 4) is particularly interesting in light of comparable data obtained from human clinical populations on similar auditory tasks. Specifically, when language-impaired and matched-control children were asked to perform a two-tone sequence discrimination similar to the current task, both groups performed at or near 100% correct as long as the total stimulus duration was at or above 578 ms (two 75 ms tones and a 428 ms ISI). However, group differences became evident when the total stimulus duration was reduced to 500 ms (two 75 ms tones and a 350 ms ISI) or less, with language-impaired children performing significantly worse than controls. At stimulus durations below 500 ms, control children performed near 100% correct while language-impaired children performed the discrimination only slightly above chance levels (9). In a related study, adults with acquired lesions of the right or left hemisphere, as well as matched controls, were tested on the same auditory task administered to language-impaired children. Discrimination deficits were found only for the left-lesioned group, again at stimulus durations below 500 ms (49).
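The total stimulus durations quoted above follow from simple arithmetic: two tones plus the single inter-stimulus interval (ISI) between them. The following minimal Python sketch recomputes those totals and compares each with the 500 ms boundary discussed in the text. The function name, dictionary labels, and the constant `BOUNDARY_MS` are illustrative assumptions and are not part of the original study's methods or software.

```python
def total_duration_ms(tone_ms, isi_ms, n_tones=2):
    """Total duration of n_tones equal-length tones separated by equal ISIs."""
    return n_tones * tone_ms + (n_tones - 1) * isi_ms


# (tone duration, ISI) pairs in ms, taken from the values quoted in the text.
# The labels are hypothetical names used only for this illustration.
STIMULI = {
    "rat, condition 3": (200, 100),
    "rat, condition 4": (150, 75),
    "children, 428 ms ISI": (75, 428),
    "children, 350 ms ISI": (75, 350),
}

# Boundary at or below which group differences were reported in the text.
BOUNDARY_MS = 500

for label, (tone_ms, isi_ms) in STIMULI.items():
    total = total_duration_ms(tone_ms, isi_ms)
    relation = "at or below" if total <= BOUNDARY_MS else "above"
    print(f"{label}: {total} ms ({relation} {BOUNDARY_MS} ms)")
```

Note that the rat and human stimuli reach the same total duration through different combinations of tone length and ISI, so the comparison in the text rests on total stimulus duration rather than on matched tone or gap lengths.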

The results of the current animal study form an exciting parallel to data obtained from clinical studies of language-impaired individuals. The results of such clinical research provided the basis for asserting that specific deficits in auditory temporal processing, in the time range of 500 ms or less, may interfere with primary speech perception and have cascading effects leading to language and reading dysfunction (see Ref. 23). The current results further suggest that neonatally induced anomalies in neocortical development, as found in the postmortem pathology of diagnosed dyslexics, may be a significant causal factor in this temporal dysfunction.

Anatomic Substrates of Auditory Temporal Discrimination

Early studies on auditory cortical lesions in adult cats showed that such lesions resulted in specific impairment of discrimination for sequences of tones differing only in temporal pattern (see also Refs. 50,51 for review). More recent studies have shown similar effects following auditory cortical lesions in adult monkeys (32,35,37). Studies on the effects of auditory cortical lesions in rats are limited, but some recent research has shown that such lesions impaired the ability of adult rats to discriminate patterns of a 4 kHz tone (52). Given these findings, which strongly suggest that functions relating to auditory temporal discrimination are “localized” in auditory cortical regions in mammalian species, one must question the mechanisms underlying the current results, since the majority of microgyric lesions were induced in somatosensory-somatomotor (SM) cortices and not auditory cortex.

It is likely that lesions of developing SM cortex resulted in profound reorganization of inter-cortical and cortical-subcortical connections, including projections critical to auditory temporal discrimination functions. Consistent with this interpretation, researchers have shown that neonatal damage to developing neural structures leads to profound and sometimes pervasive reorganization of the brain (53,54). Similarly, anomalous connectivity has been shown following spontaneous (55) and induced (56-58) microgyria. Innocenti (56) reported that microgyria induced by ibotenic acid injections in the visual cortex of cats resulted in the maintenance of transient auditory projections (that is, maintenance of projections normally pruned during development). An increase in callosal connectivity associated with a region of microgyria, as well as more pervasive disturbances, has also been reported (55,57). Similarly, induction of cerebral hypoxia by carotid ligation in neonatal cats results in a marked increase in efferent projections from visual cortex to the opposite hemisphere (59). These findings support the notion that the hypoxic-ischemic freezing injury resulting in microgyria might cause profound and pervasive disturbances in cortical connectivity. These effects may be mediated by the maintenance of otherwise transient connections. Such effects would parallel the reorganization observed following unilateral removal of the vibrissae, which results in the maintenance of a transient auditory projection from the magnocellular portion of the medial geniculate to the barrel field (60).

In a more general vein, the notion that developmental neuropathologic anomalies such as induced microgyria may be associated with subtle yet pervasive reorganization of neural connectivity patterns is appealing. Such an assertion is consistent with observations that despite the lack of profound, gross anatomical disturbances (i.e., frank lesions or anatomical malformations) in individuals with LI, more subtle anomalies have been demonstrated in a variety of systems, at both the neocortical and subcortical levels (1,2,26,61,62). Given the existence of these concomitant anomalies, one might suggest that both induced and spontaneous developmental cortical lesions result in disruption of retrograde or afferent patterns of thalamocortical connectivity, disrupting in turn local thalamic organization. Consistent with this hypothesis, induced freezing lesions of the cortical plate occur at a time-point corresponding to the arrival of thalamocortical projections beginning to arborize (63). Furthermore, Rosen et al. (32) have demonstrated that neurons from layers V and VI, from which cortical efferents to the thalamus normally arise, are specifically destroyed by P0 freezing injury. Additional anatomic research will be needed, however, to assess the effect on thalamic afferent neurons of early cortical lesion and microgyria.

The notion of pervasive neural reorganization is further consistent with reports that the temporal processing difficulties experienced by individuals with LI are multi-modal, again suggesting pervasive and/or sub-cortical anomalies (19-22). In conclusion, the mechanisms whereby developmental pathologies lead to dysfunction of rapid temporal processing in rats and individuals with LI may involve pervasive reorganization through cortical and subcortical regions, leading in turn to the specific behavioral deficits observed.

Summary and Conclusions

Individuals with LI, including dyslexia, show deficits in the “rapid processing” of information in both the auditory and visual modalities. In addition, the brains of developmental dyslexics show a wide variety of minor cortical and subcortical focal anatomical malformations, some of which are the result of injury occurring during development. The goal of the current experiment was to examine, in a rat model, whether such minor anatomical malformations could affect “rapid processing” in an auditory discrimination task similar to that which had previously been shown to differentiate language-impaired from control individuals. Our current results demonstrate that the presence of focal developmental neuropathologic lesions disrupted performance of an auditory discrimination task in rats. This deficit appeared to be specific to the discrimination of rapidly presented stimuli (total stimulus duration at or below 500 ms), a finding remarkably similar to results obtained from children with LI. Rats that received bilateral damage had the most profound disruption of performance on the discrimination task, although subjects with unilateral lesions also exhibited significant disruption at the shortest duration. We hypothesize that this deficit in “rapid processing” may be related to the profound changes in connectivity associated with developmental neocortical damage.

ACKNOWLEDGMENTS

This work was supported by an award from the McDonnell Pew Charitable Trusts to RHF, by NIH grant HD20806 to GDR and AMG, and by NIH grant NS27119 to AMG. The authors wish to acknowledge the technical assistance of Judy M. Richman and Antis Zalkalns.